
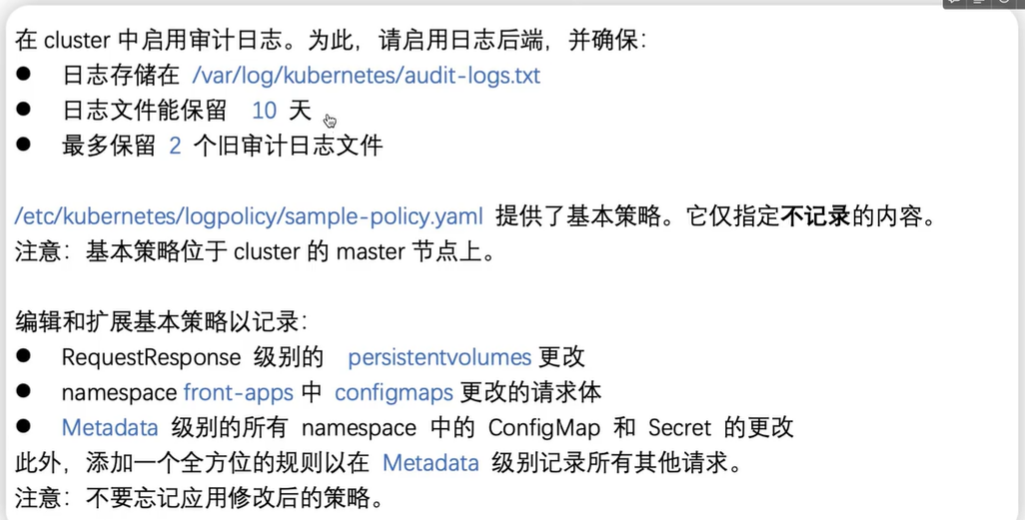

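```shell
$ vim /etc/kubernetes/logpolicy/sample-policy.yaml
```

```yaml
apiVersion: audit.k8s.io/v1
kind: Policy
# Don't generate audit events for any request in the RequestReceived stage.
omitStages:
  - "RequestReceived"
rules:
  # Rules are evaluated in order; the first matching rule sets the audit level.
  # Log all requests on PersistentVolumes with request and response bodies.
  - level: RequestResponse
    resources:
    - group: ""
      resources: ["persistentvolumes"]
  # Log request bodies for ConfigMaps in the front-apps namespace.
  - level: Request
    resources:
    - group: ""
      resources: ["configmaps"]
    namespaces: ["front-apps"]
  # Log only metadata for Secrets anywhere and ConfigMaps outside front-apps.
  - level: Metadata
    resources:
    - group: ""
      resources: ["secrets", "configmaps"]
  # Catch-all: log every other request at the Metadata level.
  - level: Metadata
    omitStages:
      - "RequestReceived"
```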

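```shell
$ vim /etc/kubernetes/manifests/kube-apiserver.yaml
```

```yaml
# Add to the command list of the kube-apiserver container:
- --audit-log-path=/var/log/kubernetes/audit-log.txt
- --audit-log-maxage=10      # keep rotated log files for at most 10 days
- --audit-log-maxbackup=2    # keep at most 2 rotated log files
- --audit-policy-file=/etc/kubernetes/logpolicy/sample-policy.yaml
```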
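The flags above point the API server at two host paths. The API server runs inside a container, so if the manifest does not already mount those paths, it cannot read the policy file or write the log, and the static pod will typically fail to come back up. Below is a minimal sketch of the extra entries, assuming hostPath volumes. The volume names `audit-policy` and `audit-log` are hypothetical, and the existing fields of the manifest are elided:

```yaml
spec:
  containers:
  - name: kube-apiserver
    # ...existing command, image, probes, and mounts stay as they are...
    volumeMounts:
    - name: audit-policy            # hypothetical name
      mountPath: /etc/kubernetes/logpolicy/sample-policy.yaml
      readOnly: true
    - name: audit-log               # hypothetical name
      mountPath: /var/log/kubernetes
      readOnly: false
  volumes:
  # ...existing volumes stay as they are...
  - name: audit-policy
    hostPath:
      path: /etc/kubernetes/logpolicy/sample-policy.yaml
      type: File                    # the policy file must already exist on the host
  - name: audit-log
    hostPath:
      path: /var/log/kubernetes
      type: DirectoryOrCreate       # the kubelet creates the directory if it is missing
```

Using `type: File` for the policy makes the pod fail fast if the file is missing. `DirectoryOrCreate` lets the kubelet create the log directory on the host.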

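```shell
$ systemctl daemon-reload                       # reload systemd unit files
$ systemctl restart kubelet                     # the kubelet recreates the kube-apiserver static pod from the edited manifest
$ kubectl get pod -A                            # confirm kube-apiserver is Running again
$ tail -f /var/log/kubernetes/audit-log.txt     # watch new audit events arrive
```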
